Interactive Soft Tissue for Surgical Simulation

My thesis covers the research and development of new technology for simulating virtual tissues that can be interacted with surgically. It includes haptic rendering (making the tissue something you can touch and feel), novel medical training simulation applications, and reviews of the related technology, hardware, and the wider research area.

Author: Greg S. Ruthenbeck. Download thesis as a PDF (from ResearchGate, 4MB).

Abstract

Medical simulation has the potential to revolutionize the training of medical practitioners. Advantages include reduced risk to patients, increased access to rare scenarios, and virtually unlimited repeatability. However, in order to fulfill its potential, medical simulators require techniques to provide realistic user interaction with the simulated patient. Specifically, they require compelling real-time simulations that allow the trainee to interact with and modify tissues as if they were practicing on real patients.

A key challenge when simulating interactive tissue is reducing the computational processing required to simulate the mechanical behavior. One successful method of increasing the visual fidelity of deformable models while limiting the complexity of the mechanical simulation is to bind a coarse mechanical simulation to a more detailed shell mesh. But even with reduced complexity, the processing required for real-time interactive mechanical simulation often limits the fidelity of the medical simulation overall. With recent advances in the programmability and processing power of massively parallel processors such as graphics processing units (GPUs), suitably designed algorithms can achieve significant improvements in performance.

This thesis describes an ablatable soft-tissue simulation framework, a new approach to interactive mechanical simulation for virtual reality (VR) surgical training simulators that makes efficient use of parallel hardware to deliver a realistic and versatile interactive real-time soft tissue simulation for use in medical simulators.

Examiner Feedback

“In his PhD. thesis Mr. Ruthenbeck worked on sophisticated scientific and technical tasks related to efficient surgery simulation frameworks and his thesis comprises contributions to various aspects of this research area.”

“Mr. Ruthenbeck proceeded very carefully and skillfully in the development of the approaches presented in his thesis. He showed a thorough background in the related research areas.”

“The presented thesis constitutes a valuable contribution to the field of interactive surgery simulators.”

Table of Contents

Chapter 1. Introduction

1.1 Thesis Aims

1.2 Thesis Outline

Chapter 2. Virtual Reality for Medical Training

2.1 Learning Modalities

2.2 VR Medical Simulations: The State of the Art

Chapter 3. Simulator Development Tools

3.1 Software Tools

3.2 Literature Surveys

3.3 Recent Advances in Parallel Computing Hardware

3.4 Conclusion

Chapter 4. A New Tissue Simulation Framework

4.1 The Tissue Simulation Framework

4.2 System Overview

Chapter 5. Mechanical Simulation

5.1 Background

5.2 Cubic Rotational Mass Springs: A New Approach

5.3 Demonstration

5.4 Extensibility

Chapter 6. Interactive Marching Tetrahedra

6.1 A Review of Marching Algorithms

6.2 Marching Tetrahedra

6.3 Interactive Marching Tetrahedra: A New Approach

6.4 Demonstration

Chapter 7. Integration of Components

7.1 Deforming the IMT Mesh

7.2 Cuts and the Coupled System

7.3 Collisions

7.4 Demonstration

Chapter 8. Haptics

8.1 6DOF Haptics

8.2 Desktop Haptic Devices

8.3 Haptics APIs

8.4 Haptic Rendering

8.5 Haptics in the TSF

8.6 Demonstration

Chapter 9. Applications

9.1 An Endoscopic Sinus Surgery Simulator

9.2 ISim: An Endotracheal Intubation Simulator

9.3 A Coblation Tonsillectomy Simulator

Chapter 10. Conclusion

10.1 Future Directions

10.2 Final Words

Bibliography

Appendix A: Mesh Coupler C++ Code Listing

Appendix B: An Earlier Version of ISim

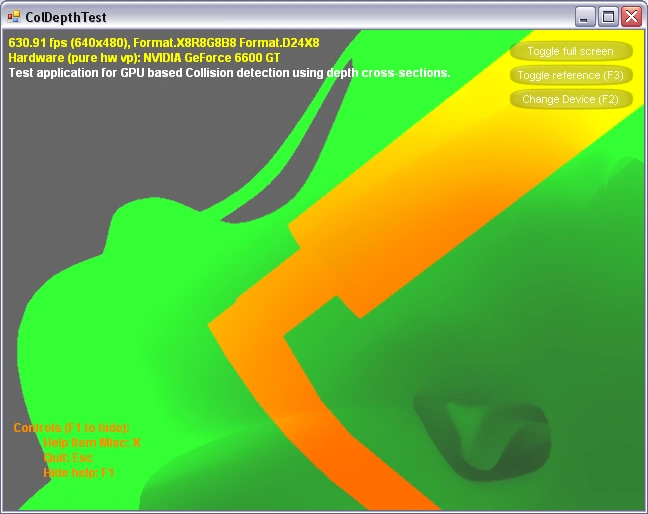

B.1 Image-based Collision Detection and Deformation

Appendix B.1 Image-based Collision Detection

This appendix presents a novel method for collision detection, haptic rendering, and deformation that uses the intersection of depth buffers (see screenshot below). The approach uses the graphics rendering pipeline to perform the complex intersection tests needed for collision detection, in place of conventional collision detection, deformation, and haptic rendering techniques.
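The idea of intersecting depth buffers can be illustrated with a small CPU-side sketch. This is not the thesis implementation, which runs in the graphics pipeline; it only assumes that the tool and the tissue have each been rendered from the same viewpoint into equally sized depth buffers, with larger values meaning farther from the camera. The names `DepthBuffer`, `CollisionResult`, and `intersectDepthBuffers` are hypothetical and exist only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU stand-in for a depth buffer read back from the GPU.
// Assumption: depth grows with distance from the camera, and a negative
// value marks a pixel where nothing was rendered.
constexpr float kEmpty = -1.0f;

struct DepthBuffer {
    std::size_t width;
    std::size_t height;
    std::vector<float> depth;  // row-major, width * height entries

    DepthBuffer(std::size_t w, std::size_t h)
        : width(w), height(h), depth(w * h, kEmpty) {}

    float& at(std::size_t x, std::size_t y) { return depth[y * width + x]; }
    float at(std::size_t x, std::size_t y) const { return depth[y * width + x]; }
};

struct CollisionResult {
    std::size_t collidingPixels = 0;  // pixels where the tool lies behind the tissue surface
    float maxPenetration = 0.0f;      // deepest overlap, in depth-buffer units
};

// toolFar:    farthest tool depth per pixel (e.g. the tool's back faces).
// tissueNear: nearest tissue depth per pixel (the visible tissue surface).
// A pixel collides when the tool reaches deeper than the tissue surface.
CollisionResult intersectDepthBuffers(const DepthBuffer& toolFar,
                                      const DepthBuffer& tissueNear) {
    assert(toolFar.width == tissueNear.width);
    assert(toolFar.height == tissueNear.height);

    CollisionResult result;
    for (std::size_t i = 0; i < toolFar.depth.size(); ++i) {
        const float tool = toolFar.depth[i];
        const float tissue = tissueNear.depth[i];
        if (tool < 0.0f || tissue < 0.0f) {
            continue;  // one of the two objects does not cover this pixel
        }
        const float penetration = tool - tissue;
        if (penetration > 0.0f) {
            ++result.collidingPixels;
            if (penetration > result.maxPenetration) {
                result.maxPenetration = penetration;
            }
        }
    }
    return result;
}
```

In a sketch like this, the per-pixel penetration values are the interesting output: a simulator could plausibly use them both to displace the tissue surface and to scale a haptic response, which is one way a single image-space test might serve collision detection, deformation, and haptic rendering together.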